Tests#

A test is a single execution of a particular test case implementation at a particular time. Where applicable, a test can be associated with one or more test sessions, and a test session with one or more tests.

A test session is a group of test interactions with an application under test that take place within a given time frame. A Selenium WebDriver session is one example of a test session. However, Zebrunner does not limit you to WebDriver sessions: you can define your own sessions according to your testing needs (e.g. testing Smart TV apps or gaming console apps).

Test statuses#

A test can have one of the following statuses:

| Status | Definition |
|---|---|
| IN PROGRESS | Indicates that a test is currently being executed |
| PASSED | Indicates that a test execution was successful and no assertions failed |
| FAILED | Indicates that a test execution failed (due to runtime error or failed assertions) |
| SKIPPED | Indicates that a test was planned for execution, but it never happened due to some reason (e.g. a dependent test failed) |
| ABORTED | Indicates that a test was interrupted by a user or system (e.g. on timeout) |

Test attributes#

Additionally, a test may have the following optional attributes and associations:

- Test sessions (see the definition above)

- Test case references

- Linked issue reference

- Logs

- Screenshots

- Labels

- Stability score

This guide provides an overview of actions on tests and sessions and key Zebrunner capabilities for results analysis.

Working with tests#

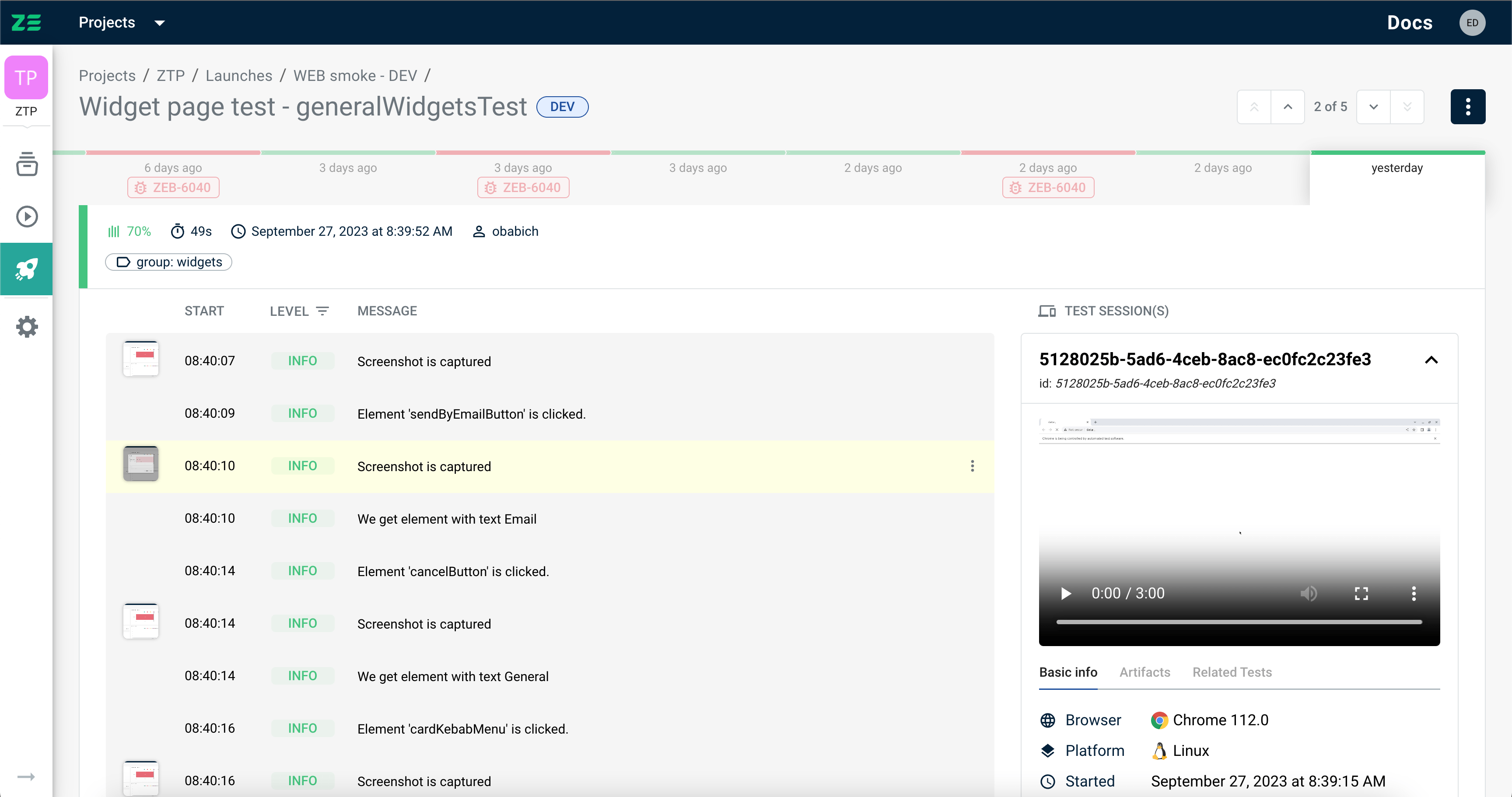

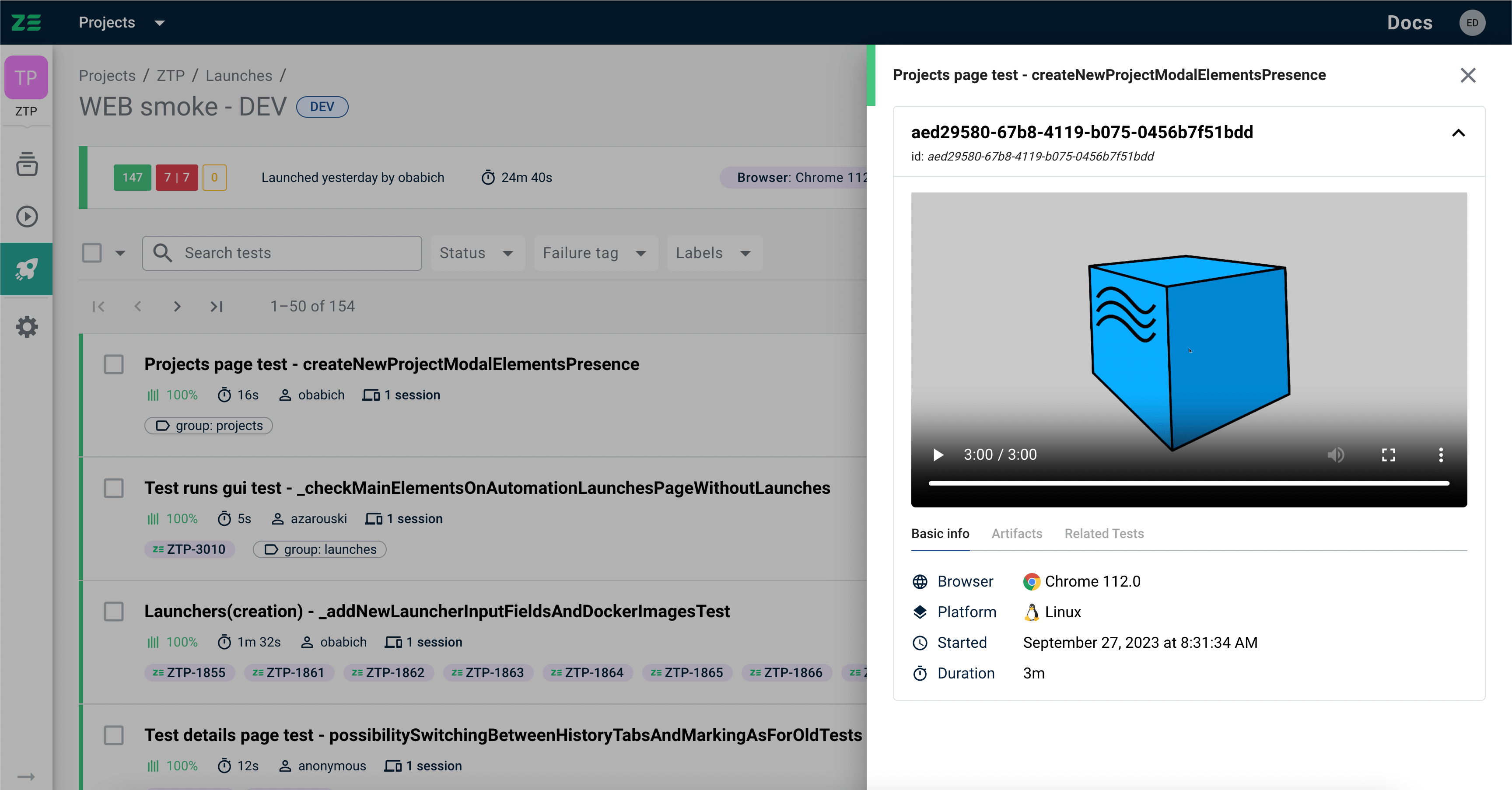

Most actions on tests and test sessions can be performed from two main views: the Launch view (opened from the Launches view by selecting any launch) and the Test view (opened from the Launch view by selecting any test).

Browsing sessions#

A test session usually comes with attached information such as the browser version, platform name, device name, logs (which can be downloaded as a separate file), and video recordings. Zebrunner also maps tests to test sessions in a many-to-many relationship:

- One test may use multiple sessions (e.g. if 2-3 browsers were started during a test). In this case, all the test sessions with their attributes are displayed for the test on both the Launch and Test views.

- Multiple tests can be executed within one session (e.g. if several tests ran in the same browser or device session). In this case, all the tests linked to this test session are displayed under its attributes.

To access the artifacts of a test session (if one is related to a test) from the Launch view, which displays the grid of tests related to the launch, click the ‘X session(s)’ link on the needed test.

A semi-page opens, displaying all the available information for the test session(s) and any related tests.

The same information is also available on the Test view and is displayed right after opening the page.

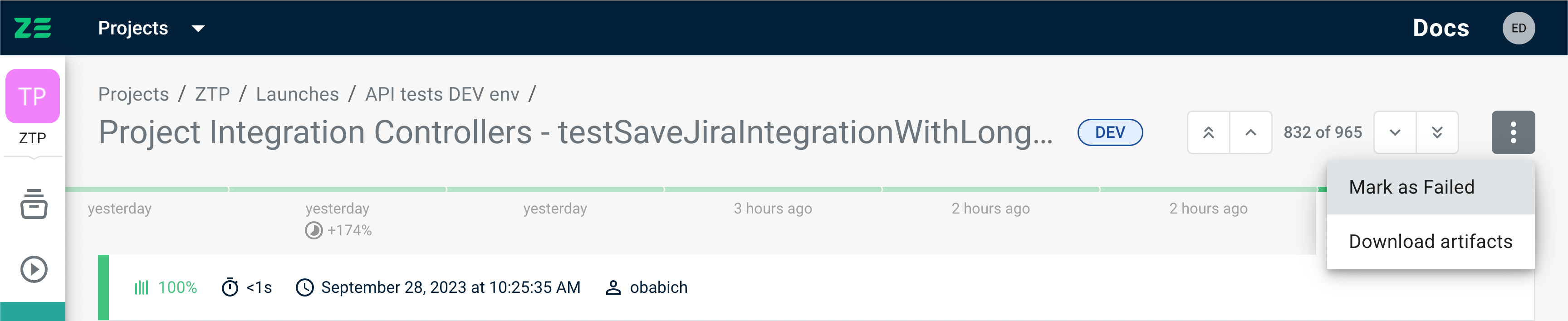

Execution history#

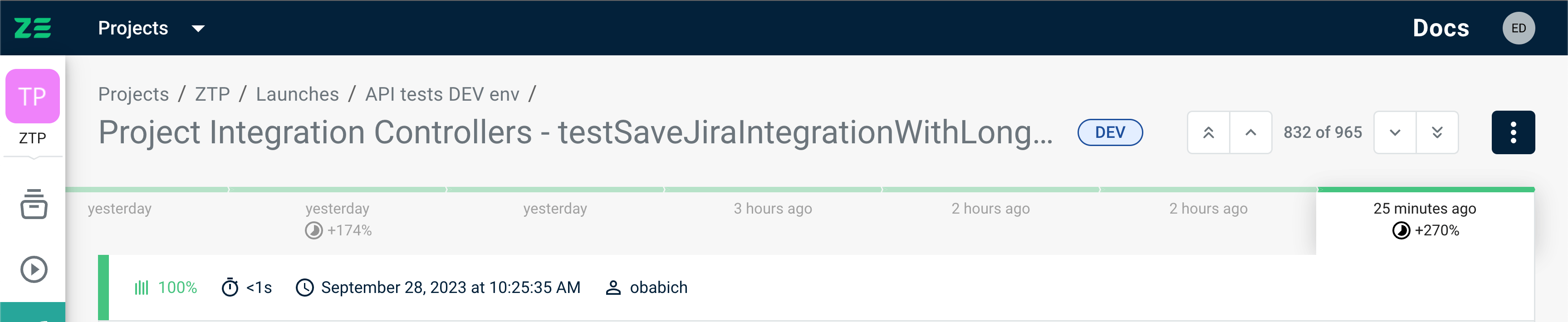

Checking the history of test results and comparing them by different criteria makes it easier to identify flaky tests and track changes in their behavior.

The Execution history panel in Zebrunner lets users see and analyze the results of the last 10 executions of the same test (on the basis of its test function) and compare their duration and attached artifacts for a more thorough analysis.

For both the current test and previous ones, you can check extended details such as test artifacts (logs, screenshots, videos, etc.) and attached labels, link failures to Jira issues, and mark tests as passed or failed.

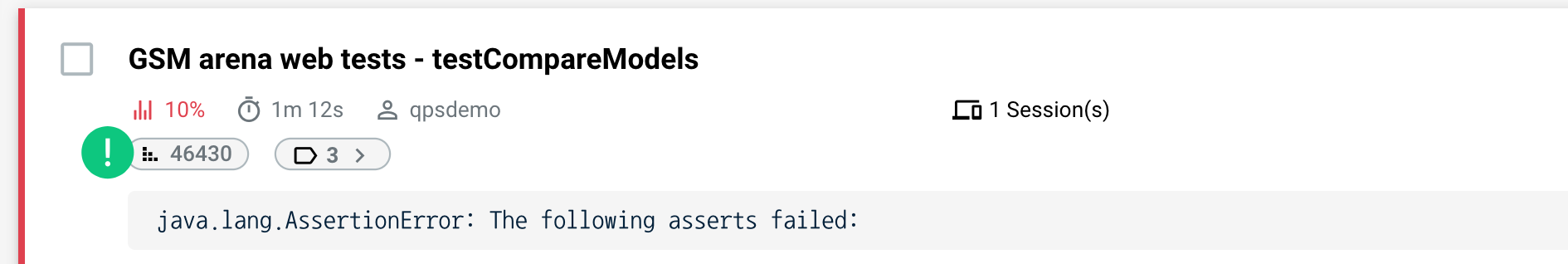

Execution time deviation in percent#

For some tests on the Execution history panel, you can see an icon with a negative or positive time value in percent. If the execution time of a test deviates considerably from the average execution time of the tests in the history (by more than a factor of two), the deviation is displayed with this icon as a percentage: + if the test took more time than average, - if it took less.
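The deviation described above can be illustrated with a short sketch. This is not Zebrunner's implementation: the function names, the use of a plain arithmetic mean, and the reading of "more than twice" as a factor-of-two threshold in either direction are all assumptions made for illustration.

```python
from statistics import mean


def deviation_percent(duration, history):
    """Signed deviation of `duration` from the average of `history`, in percent.

    Assumption: the average is a plain arithmetic mean of the durations
    in the execution history.
    """
    avg = mean(history)
    return round((duration - avg) / avg * 100)


def should_show_deviation(duration, history, factor=2.0):
    """Hypothetical rule: flag a test that ran more than `factor` times
    longer or shorter than the historical average."""
    avg = mean(history)
    return duration > avg * factor or duration < avg / factor


history = [10, 10, 10]  # durations in seconds (made-up values)
print(deviation_percent(30, history), should_show_deviation(30, history))
print(deviation_percent(12, history), should_show_deviation(12, history))
```

A positive value means the test took longer than average, a negative value means it finished faster.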

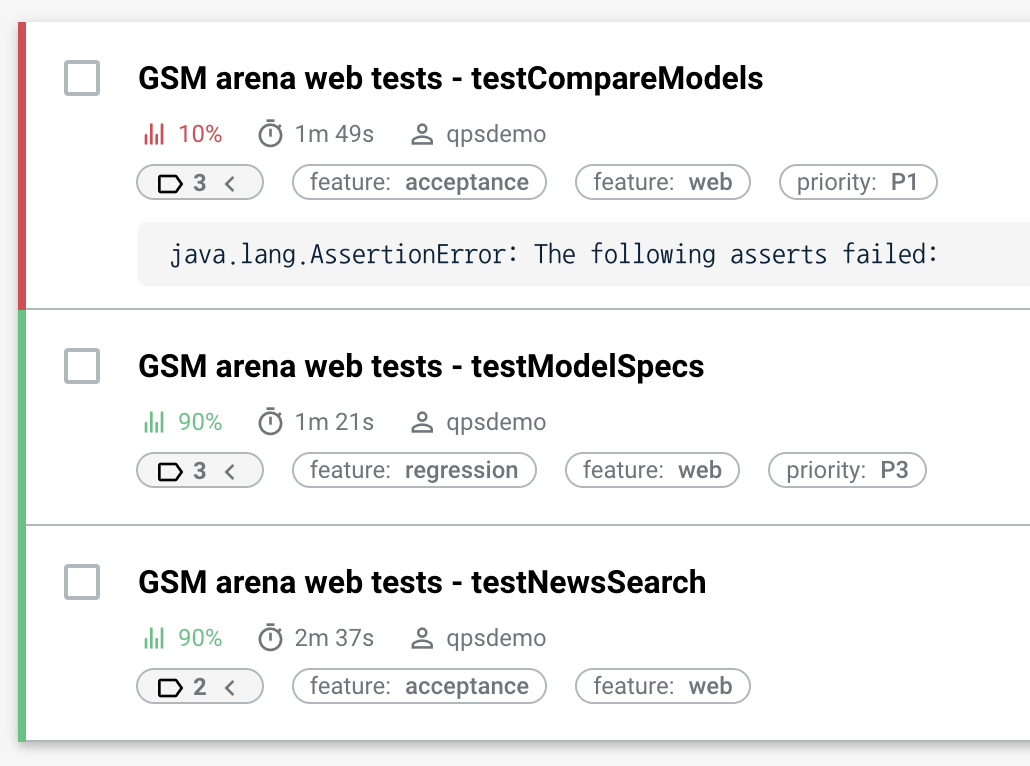

Stability indication#

In addition to Execution history, Zebrunner provides the Test stability rate feature. It shows whether a test can be considered stable or unstable on the basis of its latest 10 execution results (in other words, its Execution history).

With this functionality, it’s easier to understand when to refactor or fix the test code to get more stable test results.

The stability rate is shown for every test on the Launch view as a percentage, which means:

- From 0 to 69%: the test fails often (marked red)

- From 70 to 89%: the test is unstable and may be flaky (marked yellow)

- From 90 to 100%: the test passes consistently (marked green)
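As a rough illustration of the ranges above, the sketch below maps a stability rate to its color. The color thresholds come from the list; how Zebrunner actually computes the rate is not stated here, so `stability_rate` assumes it is simply the share of PASSED results among the latest 10 executions. Both function names are hypothetical.

```python
def stability_rate(statuses):
    """Percentage of PASSED results among the latest 10 statuses.

    Assumption: the rate is the plain share of passed executions;
    the real formula may differ.
    """
    recent = statuses[-10:]
    if not recent:
        return None
    passed = sum(1 for status in recent if status == "PASSED")
    return round(100 * passed / len(recent))


def stability_color(rate):
    """Map a stability rate (0-100) to the color used on the Launch view."""
    if rate >= 90:
        return "green"   # passes consistently
    if rate >= 70:
        return "yellow"  # unstable, possibly flaky
    return "red"         # fails often


history = ["PASSED"] * 8 + ["FAILED"] * 2
rate = stability_rate(history)
print(rate, stability_color(rate))
```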

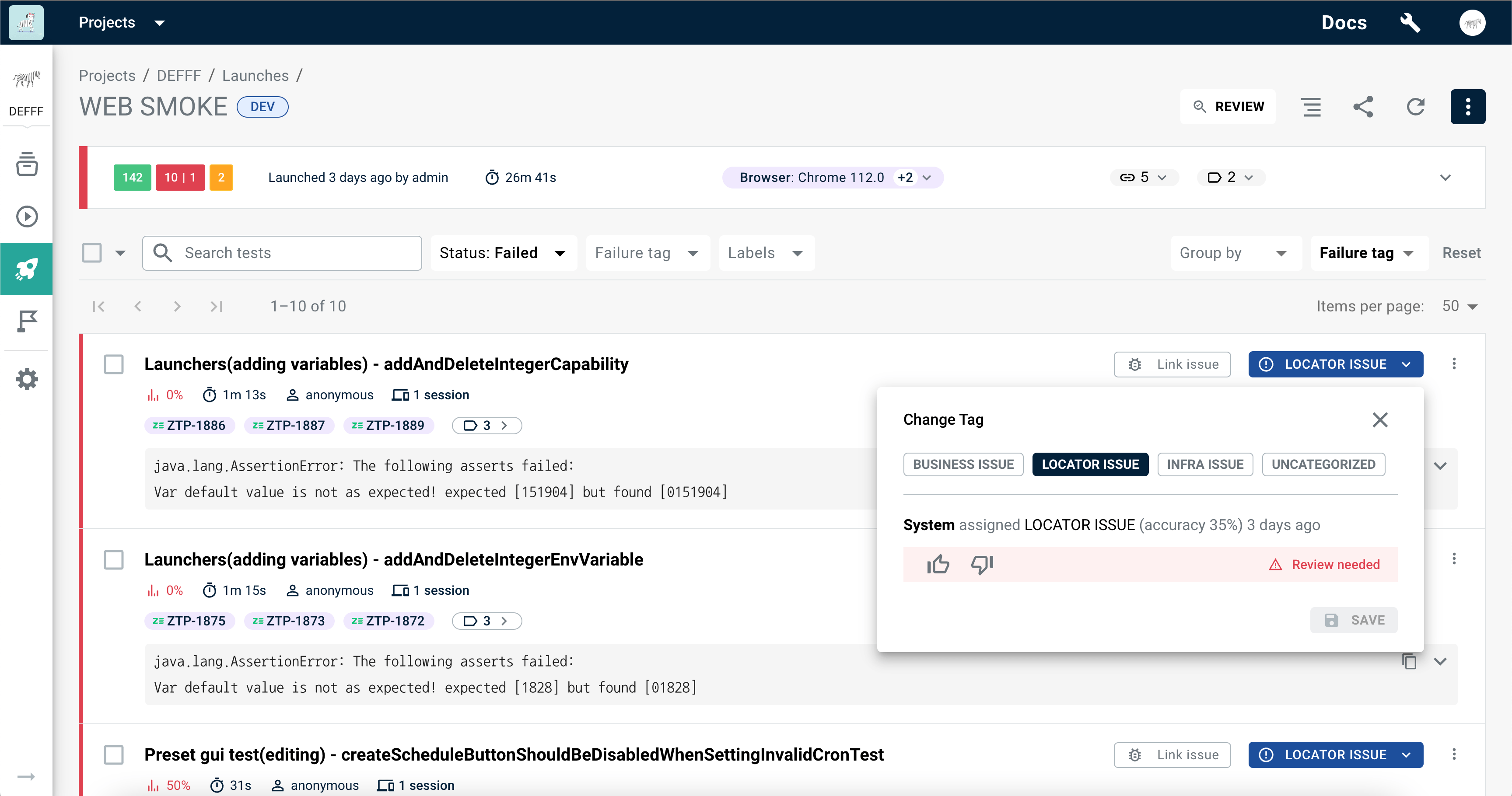

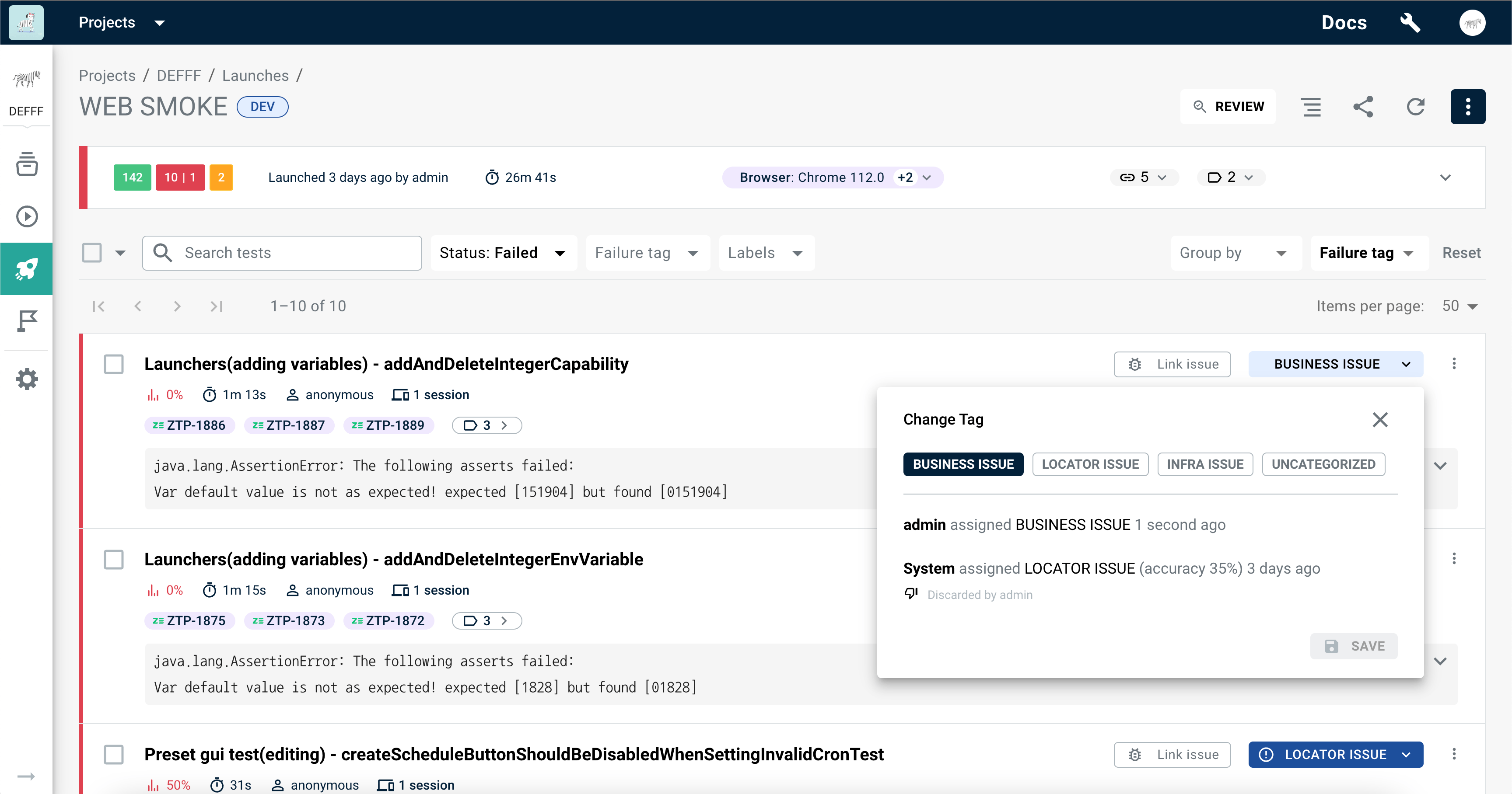

AI/ML results classification#

Zebrunner’s AI/Machine Learning-based mechanism automatically categorizes failure reasons by stack traces, helping QA engineers save time on test analysis and prioritize daily activities.

By default, there are 4 main failure reasons detected by Zebrunner’s AI/ML:

- BUSINESS ISSUE – a potential bug/defect in your application under test.

- LOCATOR ISSUE – one of the most common failures, related to UI locator or layout changes.

- INFRA ISSUE – a problem with 3rd party infrastructure components like network, computer, framework (Selenium, Appium, etc.), application, database, etc.

- UNCATEGORIZED – the default tag for an unknown failure that is not yet marked by the system or human.

After a test fails or is skipped, Zebrunner’s AI/ML automatically detects the failure reason with a certain level of accuracy (up to 100%).

If the failure reason cannot be associated with any of the above-mentioned categories, or AI/ML requires additional training, the UNCATEGORIZED tag is added. It means the failure reason needs additional investigation and human actions.

The AI classification is performed when a test finishes, possibly with a small delay. If a user assigns a failure tag during this delay, the user's assignment always takes precedence, and the system assignment is ignored.

If the AI/ML accuracy ratio is less than 90%, a special exclamation mark will appear beside the failure label. This means that AI/ML needs the user’s attention to check the correctness of the classification. The more rounds of human training the system has undergone, the more accurate the results will be.
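The two rules above (a user assignment wins over a late AI assignment, and an AI result below 90% accuracy is flagged for review) can be sketched as follows. The `FailureTag` class and both functions are hypothetical names used only for illustration; they do not reflect Zebrunner's internal data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FailureTag:
    category: str                     # e.g. "LOCATOR ISSUE"
    assigned_by: str                  # "user" or "ai"
    accuracy: Optional[float] = None  # percent, only set for AI assignments


def apply_ai_classification(current, category, accuracy):
    """Apply a (possibly delayed) AI result to a test's current tag.

    If a user has already assigned a tag, the AI result is ignored.
    """
    if current is not None and current.assigned_by == "user":
        return current
    return FailureTag(category, "ai", accuracy)


def needs_attention(tag, threshold=90.0):
    """True when an AI-assigned tag should show the exclamation mark."""
    return (
        tag.assigned_by == "ai"
        and tag.accuracy is not None
        and tag.accuracy < threshold
    )


user_tag = FailureTag("BUSINESS ISSUE", "user")
print(apply_ai_classification(user_tag, "INFRA ISSUE", 97.0).category)
print(needs_attention(apply_ai_classification(None, "LOCATOR ISSUE", 72.5)))
```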

To change the category assigned by AI/ML, simply select the most suitable one in the modal and click Save.

Assigning failure tag to multiple tests at once

If you want to assign a failure tag to tests in bulk, select them using checkboxes. Once you've made your selection, you'll find the bulk action Assign failure tag at the top of the grid. Click it and follow the prompts.

The new failure tag will override other tags assigned to tests. If the new tag matches the tag that was assigned by AI/ML, an upvote will be given to AI/ML. In the case of a match with a user-assigned tag, no new assignment will occur, and the action will be ignored.
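The three outcomes of a bulk assignment can be summarized in a small sketch. The dictionary keys (`category`, `assigned_by`, `ai_upvotes`) and the function name are assumptions made for the example. Whether the tag's owner changes after an upvote is not stated above, so the sketch leaves it untouched.

```python
def assign_failure_tag(test, new_category):
    """Apply a user-chosen failure tag to one test and report the outcome."""
    current = test.get("category")
    source = test.get("assigned_by")

    if current == new_category and source == "user":
        # Same tag already set by a user: nothing to do.
        return "ignored"
    if current == new_category and source == "ai":
        # User confirms the AI/ML choice: count it as an upvote.
        test["ai_upvotes"] = test.get("ai_upvotes", 0) + 1
        return "upvoted"

    # Any other case: the new tag replaces the previous one.
    test["category"] = new_category
    test["assigned_by"] = "user"
    return "overridden"


selected = [
    {"name": "login", "category": "INFRA ISSUE", "assigned_by": "ai"},
    {"name": "search", "category": "LOCATOR ISSUE", "assigned_by": "ai"},
    {"name": "cart", "category": "LOCATOR ISSUE", "assigned_by": "user"},
]
outcomes = [assign_failure_tag(test, "LOCATOR ISSUE") for test in selected]
print(outcomes)
```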

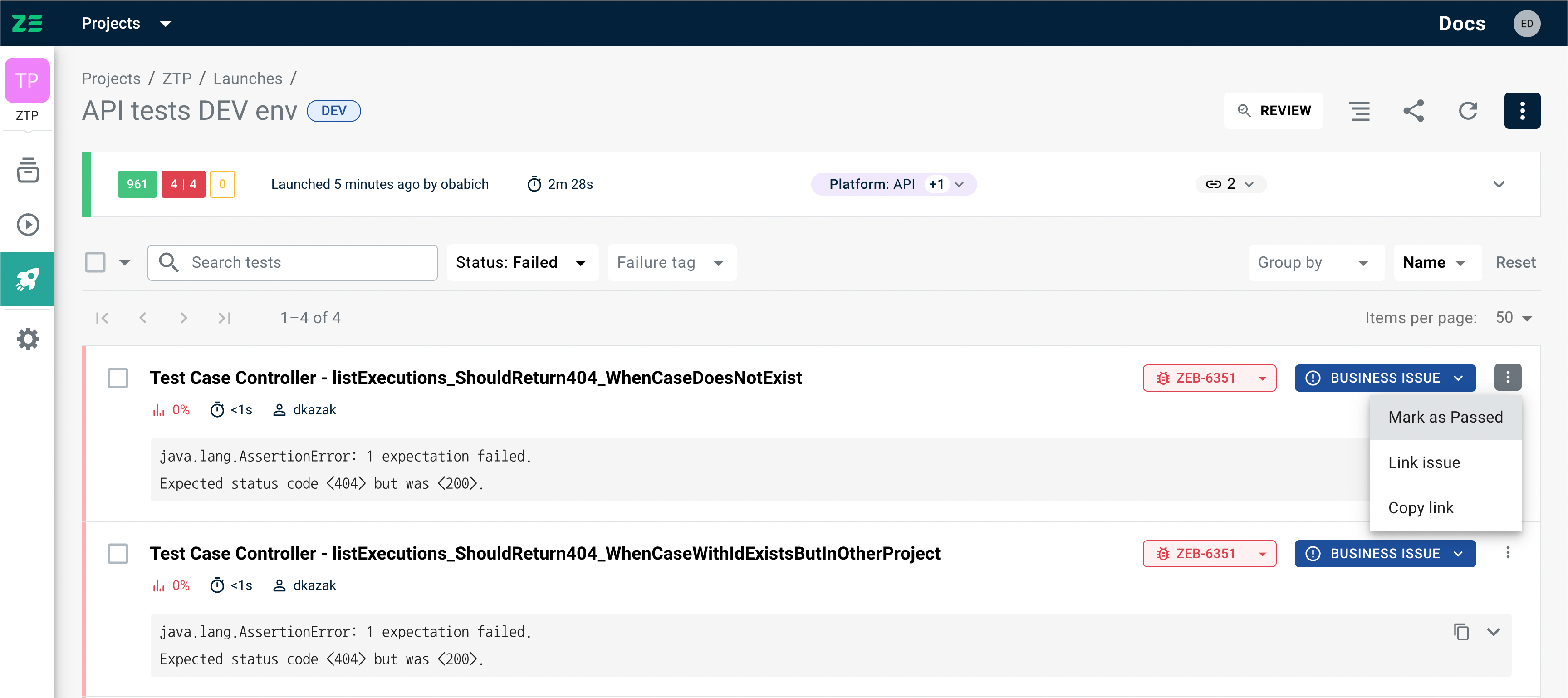

Updating result status#

If, after reviewing the test artifacts, a user concludes that the test result is a false positive (or false negative), they can manually change the test status to the correct one.

To do this, perform the following steps:

- On the Launch view, pick a test and go to More Options on the right

- Press Mark as passed (or Mark as failed) and confirm the action

Info

Availability of the Mark as passed / Mark as failed option depends solely on the current test status

Alternatively, test status can be updated on the Test view by selecting it in the dropdown in the upper right area of the screen:

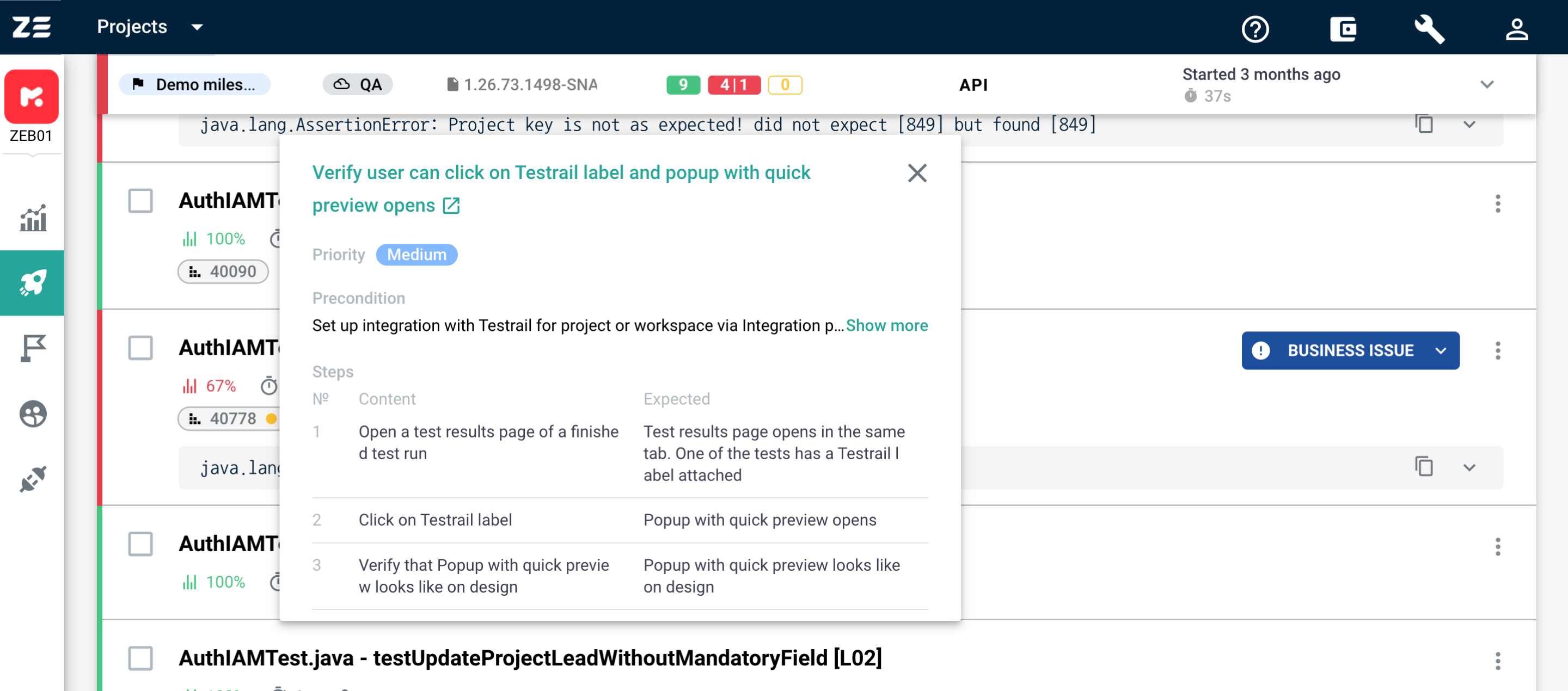

Test cases linking#

One of the most useful Zebrunner features is the ability to keep a correlation between manual test cases and automated tests. This is possible thanks to integrations with popular test case management (TCM) tools such as TestRail, XRay, and Zephyr.

After integrating Zebrunner with TestRail, XRay, Zephyr, users can:

- Link automated tests to test cases in the chosen TCM system

- Preview the linked test cases in Zebrunner UI

- Push automated test execution results to corresponding test cases in the chosen TCM system (more on this here)

After configuring the integration with TestRail, XRay, Zephyr, test cases from these systems linked to tests at Zebrunner are displayed on the Launch view as special labels.

Clicking the label opens a test case preview with a brief description of the test case (including its name, priority, preconditions, and steps), so there is no need to look for the corresponding test case in the TCM system.

From the preview, users can open the linked test case in the TCM tool in one click.

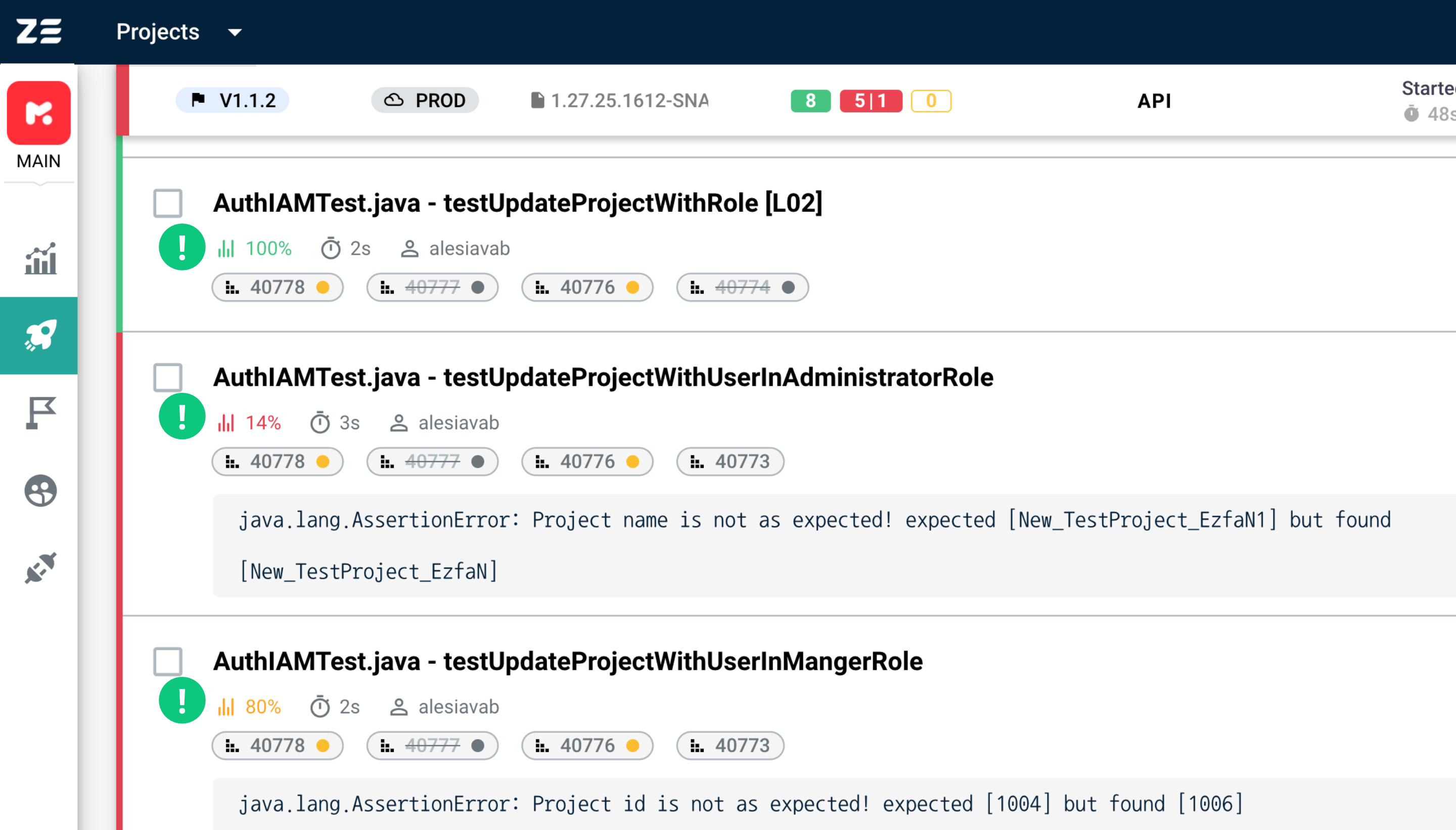

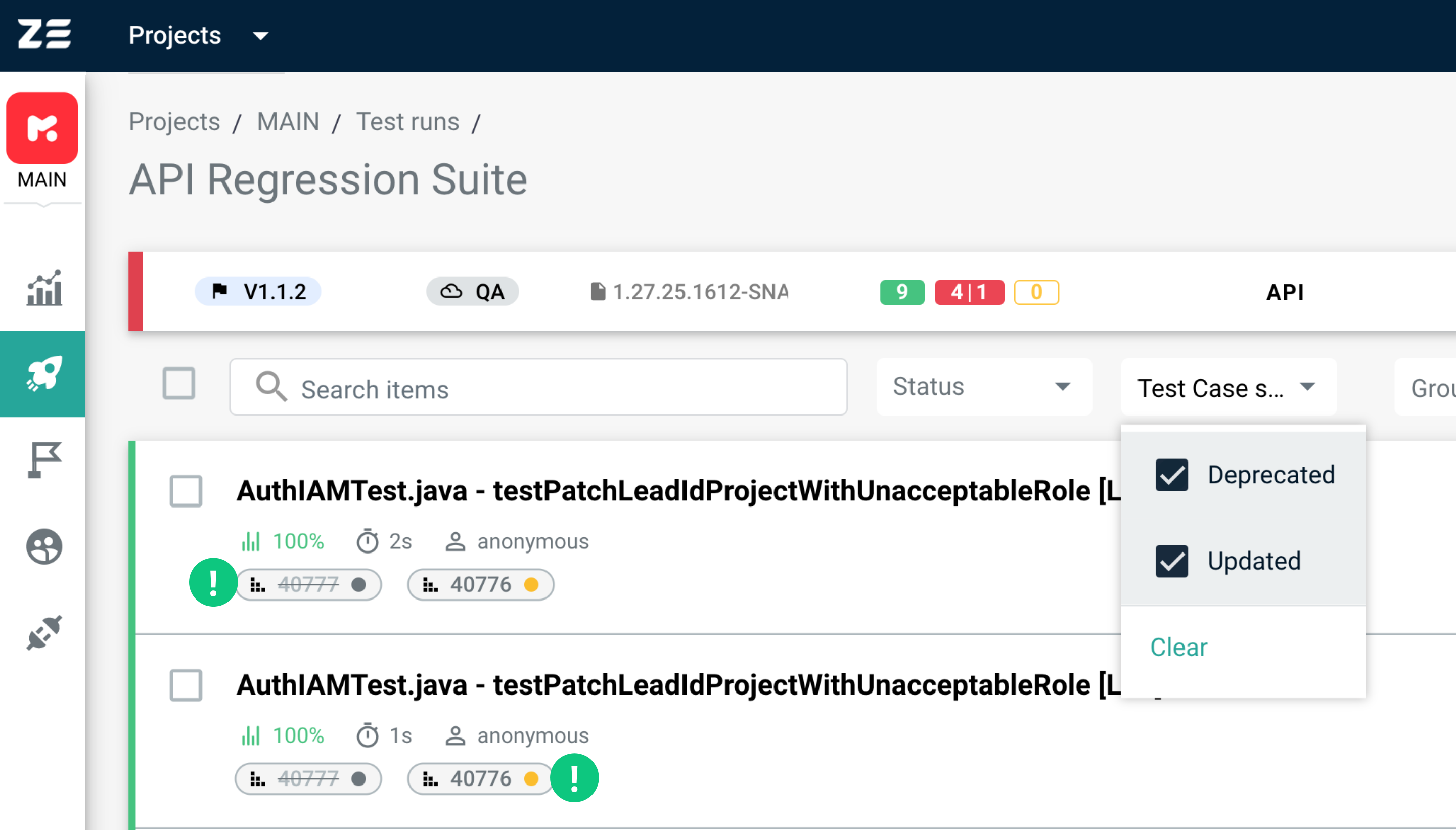

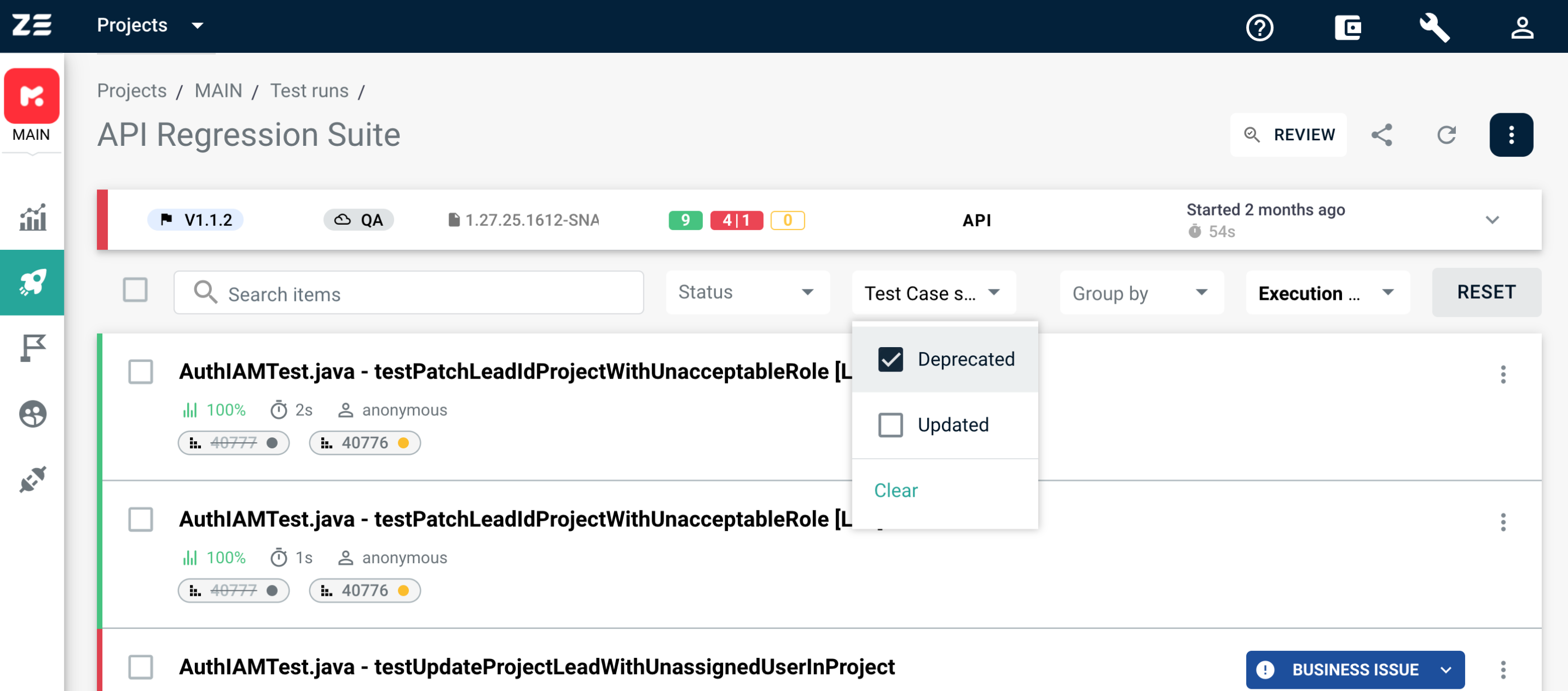

Updated and Deprecated test case labels for TestRail#

TestRail users also have the ability to mark test cases as Updated or Deprecated with the help of the corresponding labels.

This is an advanced notification mechanism for Test Automation engineers that helps sync manual test case statuses in TestRail with automated tests in Zebrunner by using special labels.

There are 2 types of notification labels that can be configured:

- Update labels with an orange dot next to the case ID, informing that the entire test case or some of its steps were updated in TestRail

- Deprecation labels with a grey dot and the strikethrough case ID, meaning that the test case is no longer valid and is retired

A notification may include an optional message that provides extra context about the change. Together with the notification type, it helps test automation engineers make the required changes on their side.

Info

To learn more about the configuration of this functionality, go to the TestRail integration guide

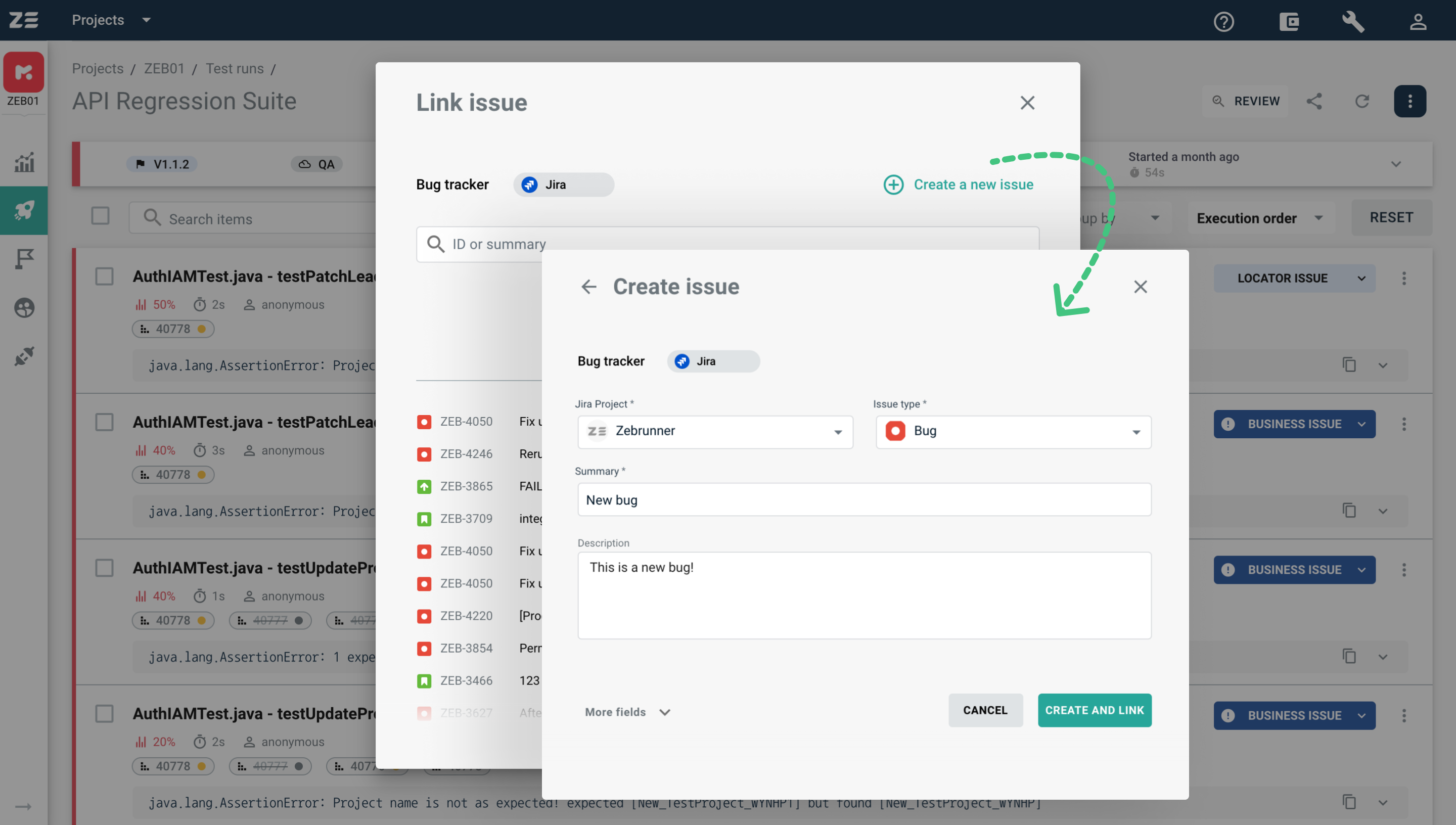

Issue linking#

When a test failure is caused by a bug/issue/defect in the application, you may want to file it in a bug tracking system and keep a correlation between it and the failed test for the sake of traceability.

Zebrunner can help you to keep track of those links and even to create issues directly from Zebrunner UI in one of the integrated systems.

How it works: after a failure is linked to a Jira issue, if Zebrunner identifies a similar stack trace in a later execution of the same test and the issue is not yet closed in Jira, the new failure is automatically linked to the issue and counted separately in the launch statistics.

Once the Jira issue is closed or manually unlinked, automatic linking stops, but the link remains displayed for previous tests in order to keep the history.
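The conditions for automatic linking can be written down as a small predicate. This is only a sketch: the field names are hypothetical, and the way Zebrunner decides that two stack traces are "similar" is not documented here, so the example substitutes a simple text-similarity ratio from Python's standard `difflib` module with an arbitrary threshold.

```python
from difflib import SequenceMatcher


def traces_similar(first, second, threshold=0.9):
    """Stand-in similarity check (assumption, not Zebrunner's algorithm)."""
    return SequenceMatcher(None, first, second).ratio() >= threshold


def should_auto_link(link, failure):
    """Decide whether a new failure is linked to a previously linked issue."""
    return (
        not link["unlinked"]                      # link was not removed manually
        and link["issue_status"] != "CLOSED"      # issue is still open in Jira
        and link["test_function"] == failure["test_function"]  # same test
        and traces_similar(link["stack_trace"], failure["stack_trace"])
    )


link = {
    "issue_status": "OPEN",
    "unlinked": False,
    "test_function": "tests.cart.test_checkout",
    "stack_trace": "NoSuchElementException: #pay-button",
}
failure = {
    "test_function": "tests.cart.test_checkout",
    "stack_trace": "NoSuchElementException: #pay-button",
}
print(should_auto_link(link, failure))
print(should_auto_link({**link, "issue_status": "CLOSED"}, failure))
```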

Info

In order for this feature to work properly, make sure that you configured the Jira integration

There are two ways to link a failure to a Jira issue: link an existing Jira issue or create a new Jira issue directly from Zebrunner.

Link an existing Jira issue#

To link a Jira issue to a test, perform the following steps:

- Go to the Launch view and click More Options on the right of the needed test

- Select Link issue; the Link issue dialogue will appear

- Start typing the issue ID or summary in the search field

- Choose the needed issue and click Link

The linked issue will be added to the linking history together with the corresponding details.

A red issue label will be added to the test, and the number of known issues in test statistics at the top will be updated as well. You can quickly access the linked issue in Jira by clicking the corresponding Jira label.

Create and link a new Jira issue from Zebrunner#

Besides the possibility to link an already existing Jira issue to a test, it’s possible to create different kinds of new Jira issues right from Zebrunner.

To do this, perform the following steps:

- Go to the Launch view and click More Options on the right

- Select Link issue; the Link issue dialogue will appear

- Click Create a new issue

- Define the Jira project, issue type, and fill in the required fields

- Click Create and link

The issue will be automatically added to Jira with the provided info, and linked to the chosen tests. You can access the newly created issue in Jira by clicking the corresponding Jira label.

Info

Some Jira projects may have required fields that are not supported by Zebrunner. In this case, the issue will not be created in Jira. Please contact us at support@zebrunner.com to add your required field to the system

This functionality is also available from the Test view.

Labeling tests#

Zebrunner allows you to label tests and use those labels for further grouping of tests and results analysis in the system. Labels can be seen on both the Test view and the Launch view (collapsed by default).

At the time of writing, labeling is not possible via the UI and can be done only from test code. For more information on the reporting APIs that allow this, see the reporting section of the documentation.

Tip

In order to expand (or collapse) labels for all tests in the launch on the Launch view, go to More Options in the upper right corner and select Show labels (or Hide labels).

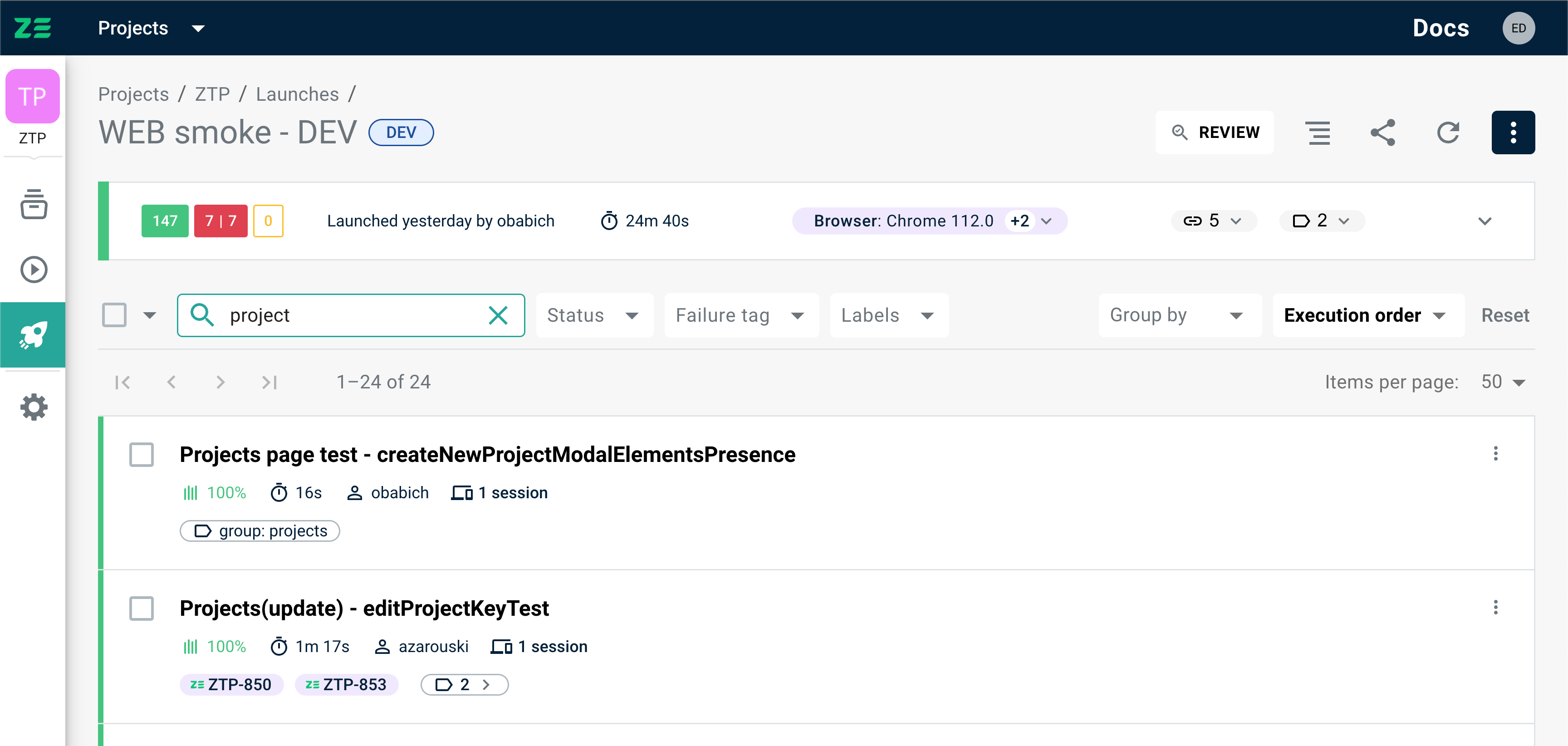

Filtering, sorting and grouping#

The Launch view contains all the results of the tests executed within a particular launch. The more tests a launch contains, the more difficult it can be to manage and analyze them. To simplify this process, the Launch view has filters that let users search, group, and sort tests on the grid according to different criteria.

On the Launch view, you can search by test name and stack trace via the Search field.

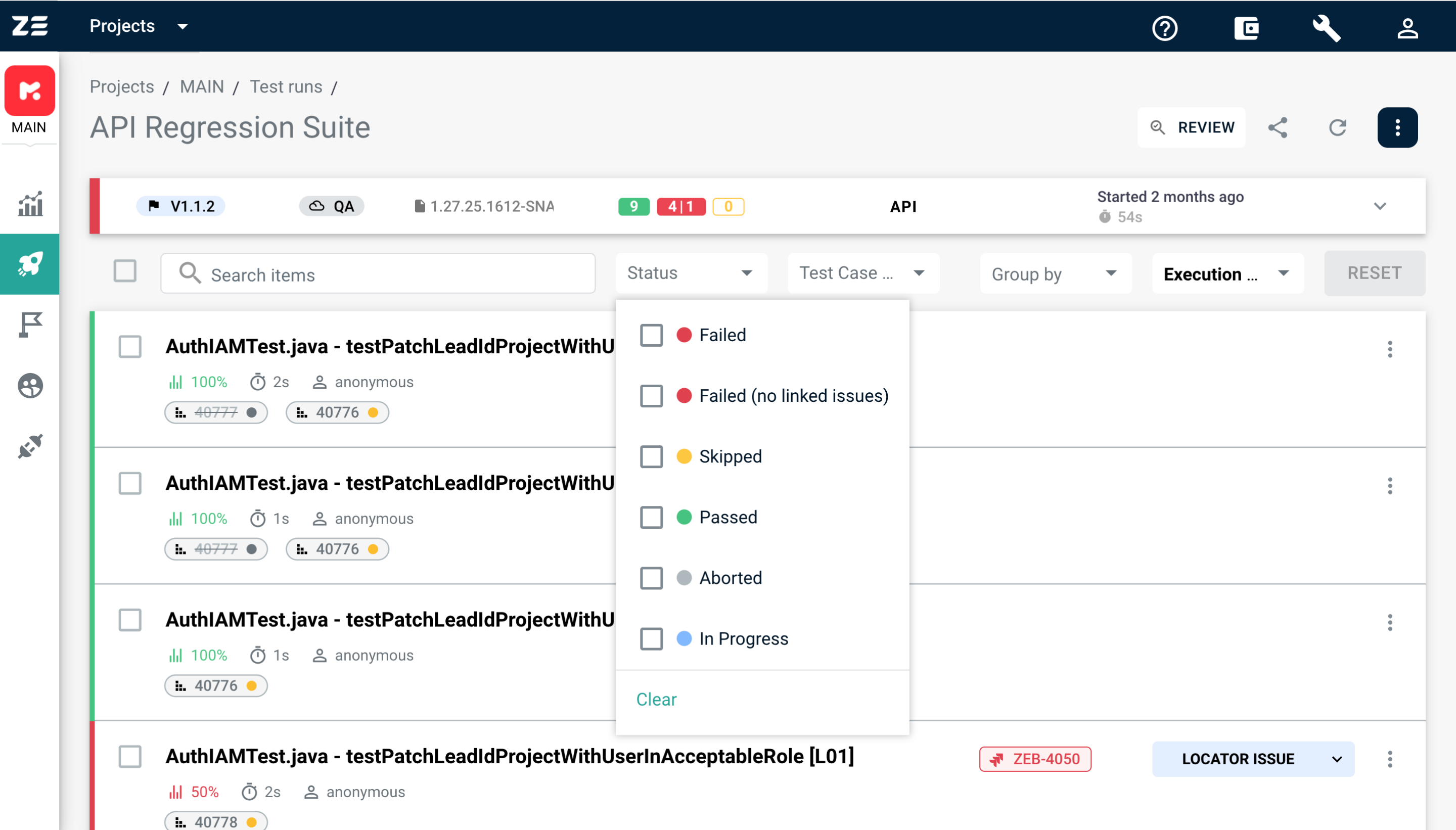

You can filter tests by status (failed, failed (no linked issues), skipped, passed, aborted, in-progress).

You can also filter tests by the test case state (TestRail only for now) — Updated or Deprecated (more about this feature here).

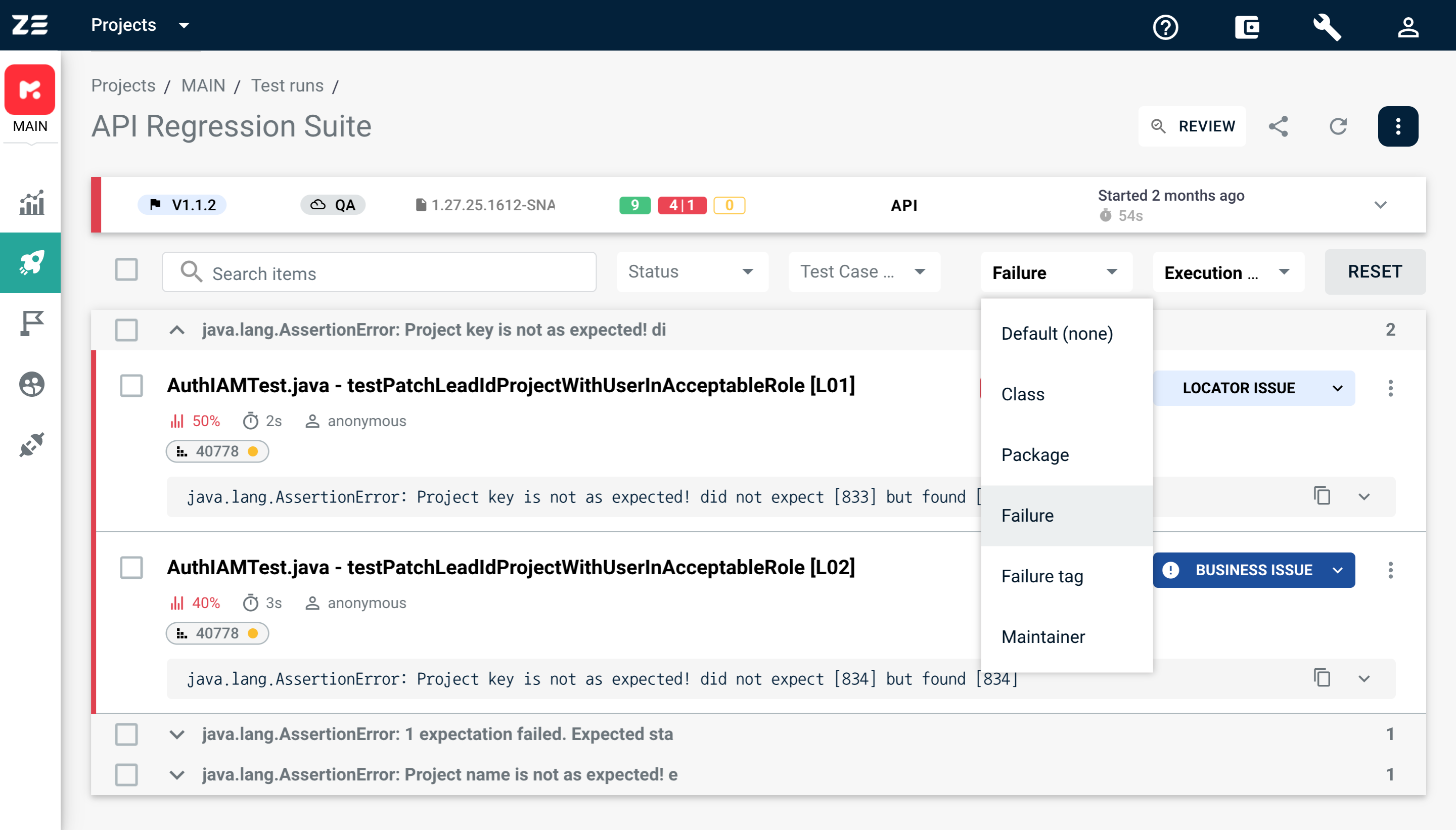

You can group your tests by:

- Class

- Package

- Label (e.g. test case ID in TCM, feature, priority, etc. set in your code)

- Failure (stack trace)

- Failure tag (AI/ML-set categories)

- Test maintainer

To group your tests by any of these criteria, choose the necessary option from the Group by drop-down.

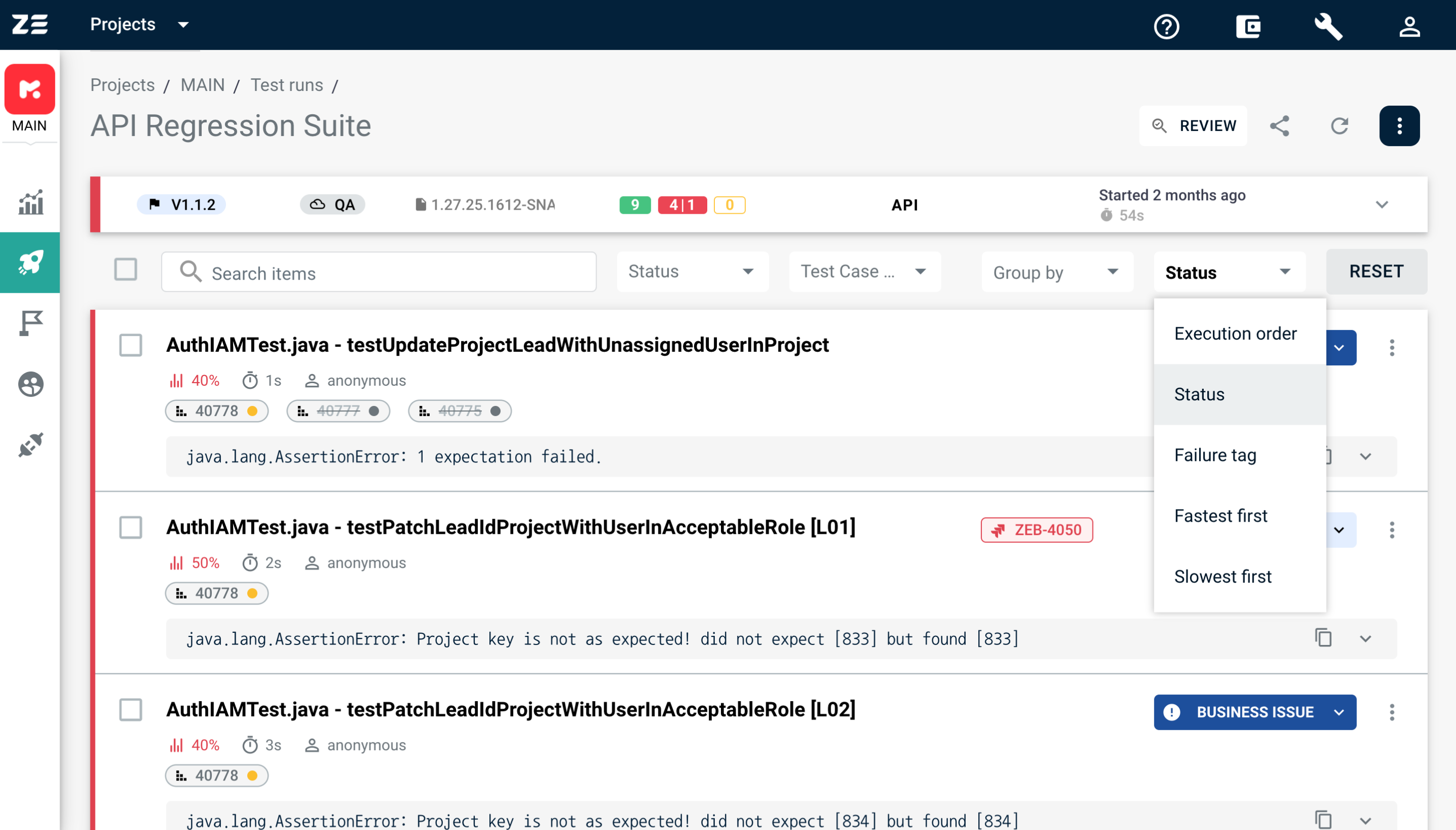

You can also sort tests by:

- Execution order (by default)

- Status (failed, skipped tests at the top, followed by passed)

- Failure tag (AI/ML-set categories)

- Fastest first

- Longest first

To sort your tests by any of these criteria, choose the necessary option from the Sort by drop-down.

Info

You can apply a search option, group and sort the tests simultaneously. Press Reset to come back to the default view.
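To make the grouping and sorting options more concrete, here is a minimal sketch of how such operations behave on a list of test results. The record fields and function names are hypothetical. The position of statuses other than failed, skipped, and passed in the Status sort is not specified above, so the sketch places them last as an assumption.

```python
from collections import defaultdict

# Failed and skipped tests first, then passed; anything else goes last (assumption).
STATUS_ORDER = {"FAILED": 0, "SKIPPED": 1, "PASSED": 2}


def sort_by_status(tests):
    return sorted(tests, key=lambda t: STATUS_ORDER.get(t["status"], len(STATUS_ORDER)))


def fastest_first(tests):
    return sorted(tests, key=lambda t: t["duration"])


def group_by(tests, key):
    """Group tests by any field, e.g. "class", "package" or "failure_tag"."""
    groups = defaultdict(list)
    for test in tests:
        groups[test.get(key)].append(test)
    return dict(groups)


tests = [
    {"name": "a", "status": "PASSED", "duration": 5, "class": "CartTest"},
    {"name": "b", "status": "FAILED", "duration": 9, "class": "CartTest"},
    {"name": "c", "status": "SKIPPED", "duration": 1, "class": "LoginTest"},
]
print([t["name"] for t in sort_by_status(tests)])
print([t["name"] for t in fastest_first(tests)])
print({k: [t["name"] for t in v] for k, v in group_by(tests, "class").items()})
```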

Navigation between tests#

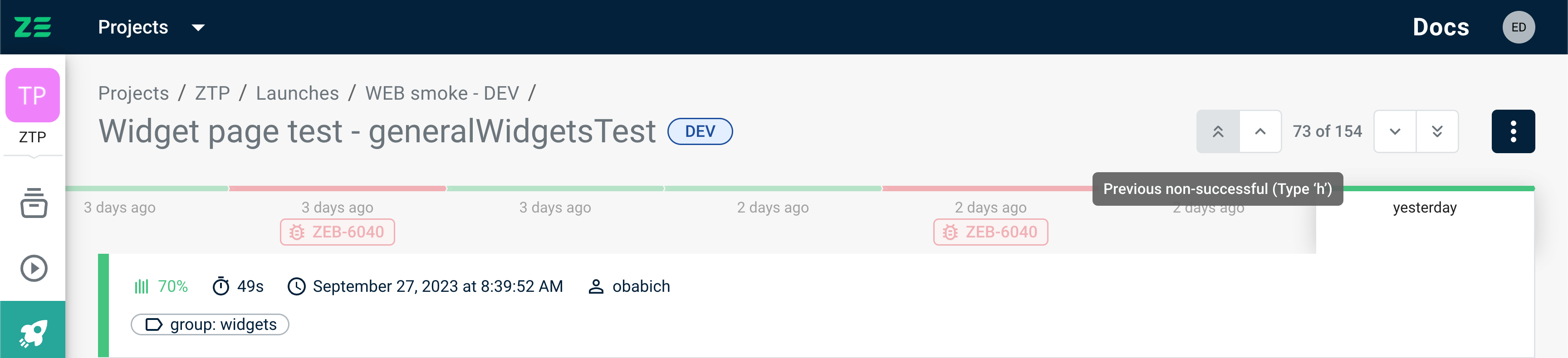

Zebrunner offers seamless navigation between test executions within an automation launch.

The navigation can be performed via designated controls on the UI or using keyboard shortcuts:

- 'h': navigate to the previous non-successful test

- 'j': navigate to the previous test

- 'k': navigate to the next test

- 'l': navigate to the next non-successful test

A non-successful test means a test execution with any status other than PASSED.

The tests you can navigate to constitute a navigable set of tests. This set is built from the tests visible on the Launch page. That means if you use filters, sorting, or grouping criteria on the Launch page, these criteria shape the tests in the navigable set.

If you share a direct link to a test after applying filters, sorting, or grouping, the link carries the applied criteria, not the list of tests in the navigable set. If the test you intended to open via the link is no longer part of the navigable set, or if no filters, sorting, or grouping were applied, the navigable set includes all tests of the launch, sorted in the default order.

Any updates made to tests on the Test page do not affect the navigable set.

Example: You are on the Test page, viewing the details of a failed test, and the navigable set includes only failed tests. You change the status of the test to PASSED, navigate to the next test, and then navigate to the previous non-successful test. As a result, the last navigation takes you to the test that has just been marked as PASSED: the test was failed when the navigable set was created, and the navigable set remains unchanged.
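The behavior in this example can be modeled with a small sketch in which the navigable set is a snapshot taken when it is built. The `NavigableSet` class, its methods, and the record fields are hypothetical and serve only to illustrate why later status changes do not affect navigation.

```python
class NavigableSet:
    """Snapshot of the tests visible on the Launch page, in display order."""

    # key -> (direction, only non-successful tests)
    KEYS = {"h": (-1, True), "j": (-1, False), "k": (1, False), "l": (1, True)}

    def __init__(self, tests):
        # Copy IDs and statuses so later updates to the tests are not seen.
        self._ids = [t["id"] for t in tests]
        self._status = {t["id"]: t["status"] for t in tests}

    def press(self, key, current_id):
        """Return the ID of the test to navigate to, or None if there is none."""
        direction, only_non_successful = self.KEYS[key]
        i = self._ids.index(current_id) + direction
        while 0 <= i < len(self._ids):
            candidate = self._ids[i]
            if not only_non_successful or self._status[candidate] != "PASSED":
                return candidate
            i += direction
        return None


failed = [{"id": "A", "status": "FAILED"}, {"id": "B", "status": "FAILED"}]
nav = NavigableSet(failed)
failed[0]["status"] = "PASSED"   # status changed on the Test page
current = nav.press("k", "A")    # next test
print(current)
print(nav.press("h", current))   # previous non-successful test
```

The last call still returns test A, because the snapshot recorded it as failed when the set was created.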